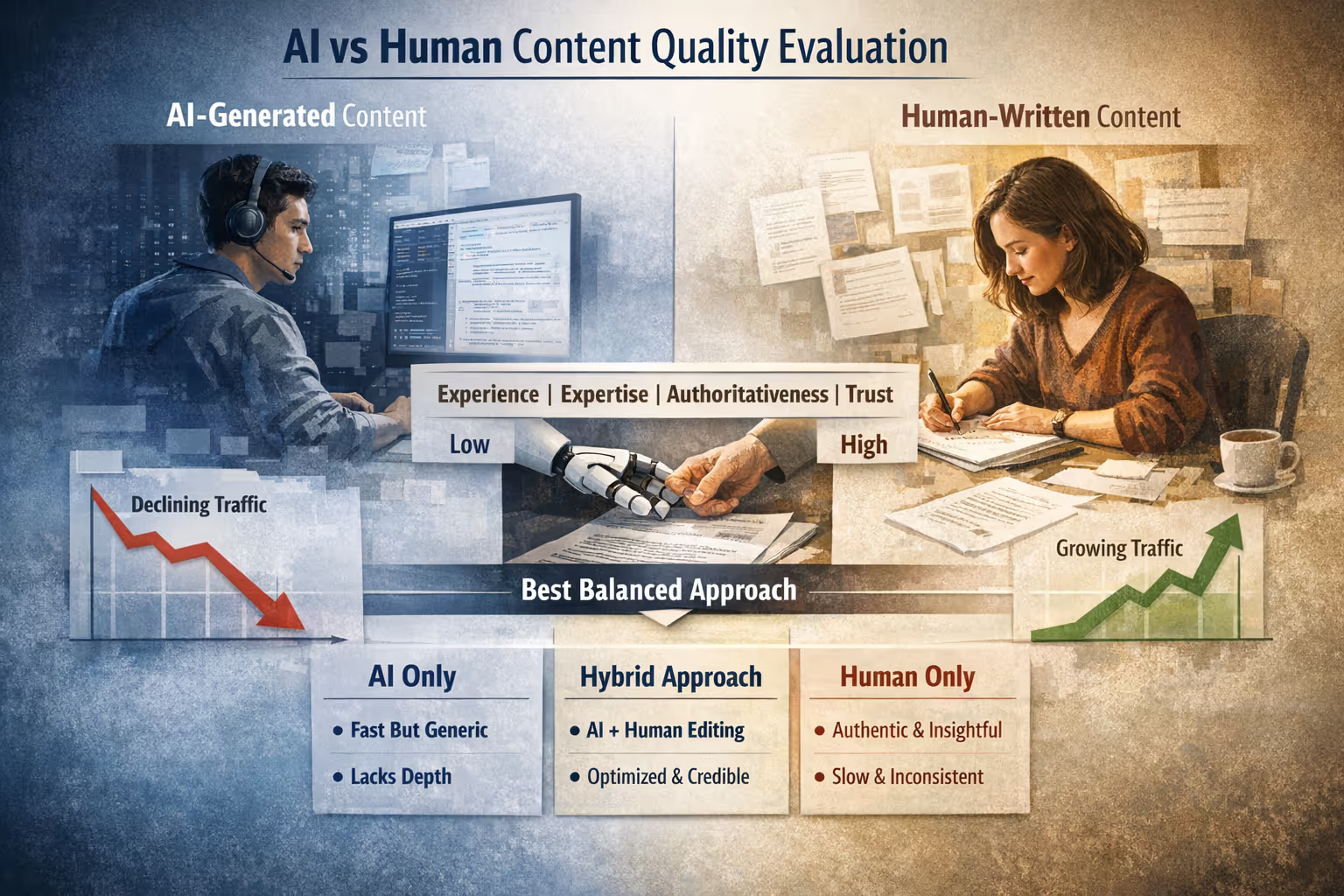

The debate feels settled—until you actually look at what's ranking.

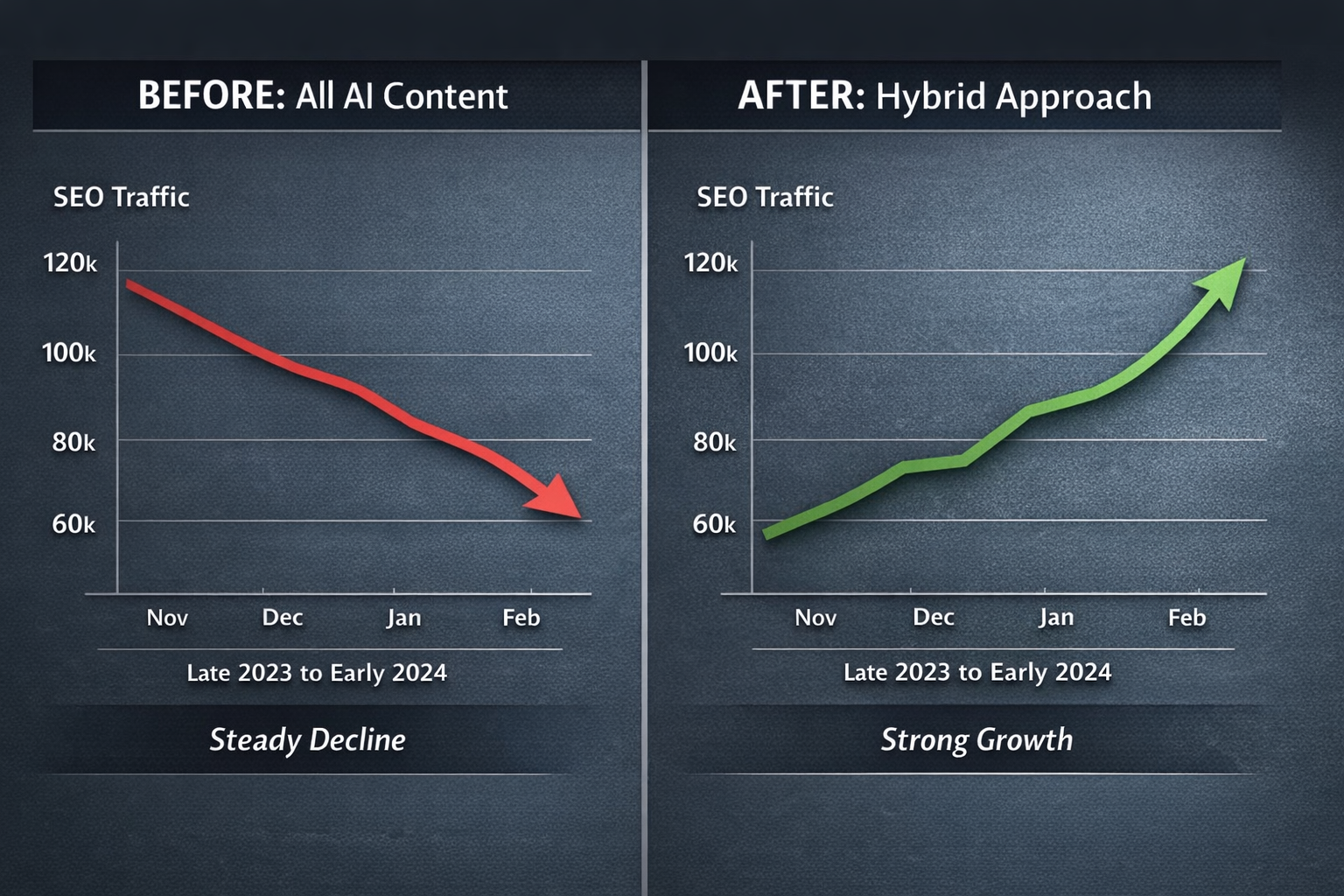

Last month, we analyzed a client's competitor that publishes exclusively with AI. Their traffic graph looks like a slow leak: steady decline since late 2023. Meanwhile, another competitor (one human writer, two posts per month) has seen traffic climb 40% over the same period.

But here's the twist: a third competitor using a hybrid approach (AI drafts, heavy human editing) outpaced both. By a lot.

The "AI vs human" framing misses the point entirely. The question isn't which is better. It's how you combine them—because the brands building sustainable organic traffic aren't choosing sides. They're designing systems that capture the strengths of each while compensating for the weaknesses.

This guide shows you exactly how to find the right balance between AI and human content for SEO—with specific examples, before-and-after comparisons, and the workflow we use at The Mighty Quill.

Why the AI vs Human Content Debate Is the Wrong Question

Most conversations about AI content versus human content assume you're picking a team. Team AI promises speed and scale. Team Human promises depth and authenticity.

Search engines don't care about your team.

They care whether content helps the person searching. Google's helpful content guidelines emphasize experience, expertise, authoritativeness, and trustworthiness—not the tool used to create the content [1]. The algorithm evaluates what's on the page, not the process behind it.

Here's where this gets practical:

Purely AI-generated content often lacks the nuance, lived experience, and original insight that makes content genuinely useful. It can hit surface-level quality signals while missing the deeper markers of expertise.

Purely human-written content struggles to maintain publishing consistency. Most companies that commit to "only human writers" publish sporadically—then wonder why their organic traffic flatlines.

The solution isn't philosophical. It's operational: design a workflow that captures AI's efficiency and human expertise simultaneously.

What AI Content Does Well (And Where It Falls Short)

AI writing tools have improved dramatically. ChatGPT, Claude, and similar large language models can produce grammatically correct, well-structured content in seconds. For certain tasks, that speed changes everything.

Where AI genuinely helps:

Research synthesis — Tools like Perplexity can summarize information from multiple sources into coherent starting points. Instead of spending two hours gathering background information, you spend fifteen minutes reviewing and refining what the AI compiled.

First-draft generation — Claude excels at turning a detailed brief into a structured draft. The blank page problem disappears. You're editing instead of staring.

Structural consistency — AI follows templates reliably. If you need 50 product descriptions that all hit the same format, AI handles this faster than any human team.

Scaling production — This is the real unlock. A single editor working with AI can produce more publishable content than a team of three writers working without it.

Where AI consistently struggles:

Original insight — AI can only remix what already exists. It cannot share lessons from a campaign it ran, a client conversation that shifted its thinking, or a failure that taught something unexpected. It infers patterns; it doesn't know things.

Voice and personality — Raw AI output tends toward a predictable rhythm: same sentence lengths, same transitions, same "helpful assistant" tone. Without intervention, it sounds like everyone else's AI content—because it basically is.

Factual accuracy — Hallucinations remain a serious risk. AI confidently states statistics that don't exist, attributes quotes to people who never said them, and invents case studies from thin air [2]. Every claim requires verification.

Emotional resonance — The subtle rhythms that make writing feel human—the well-placed fragment, the moment of vulnerability, the joke that lands—these get smoothed away in AI's pursuit of grammatical correctness.

The pattern is clear: AI handles the mechanical parts of writing efficiently. The creative, experiential, trust-building elements still need human involvement.

What Human Writers Bring That AI Can't Replicate

Human writers carry something AI fundamentally lacks: lived experience.

When a marketing director writes about scaling content operations, they draw on actual campaigns, real failures, and lessons learned through trial and error. That context shows up in specific ways:

The example that isn't from a "top 10" listicle, but from something they actually tried

The caveat they add because they've seen the edge case where the standard advice fails

The confidence that comes from knowing rather than inferring

Human writers deliver:

First-hand expertise — Not "here's what experts say" but "here's what I did, what happened, and what I'd do differently." This is the foundation of Google's E-E-A-T framework—the first "E" stands for Experience [1].

Original perspectives — Fresh angles that haven't been synthesized from existing content. The insight that connects two ideas no one else has connected. The contrarian take backed by evidence.

Emotional intelligence — Understanding what readers actually feel, fear, and want. The ability to acknowledge difficulty without being discouraging. The instinct for when to push and when to reassure.

Brand voice consistency — The subtle personality that makes content recognizable. Your company's point of view, not a generic "helpful" tone.

Critical judgment — Knowing what to include, what to cut, and what needs more depth. AI produces everything at medium depth; humans know when to go deep and when to stay concise.

These qualities directly support the expertise signals that Google uses to evaluate content quality. Search engines increasingly reward content that demonstrates genuine expertise—something AI alone cannot manufacture.

How to demonstrate experience in your content:

Use "I" statements when sharing direct observations: "I've tested this across dozens of campaigns" carries more weight than "many marketers have found."

Reference specific situations: project types, client conversations, unexpected results.

Acknowledge limitations and edge cases—AI tends toward false confidence; humans can show nuanced understanding.

Share the reasoning behind recommendations, not just the recommendations themselves.

The Hybrid Approach: A Step-by-Step Workflow

The most effective content strategies treat AI as a tool within a human-led system. Think of it as AI-assisted, human-directed content creation.

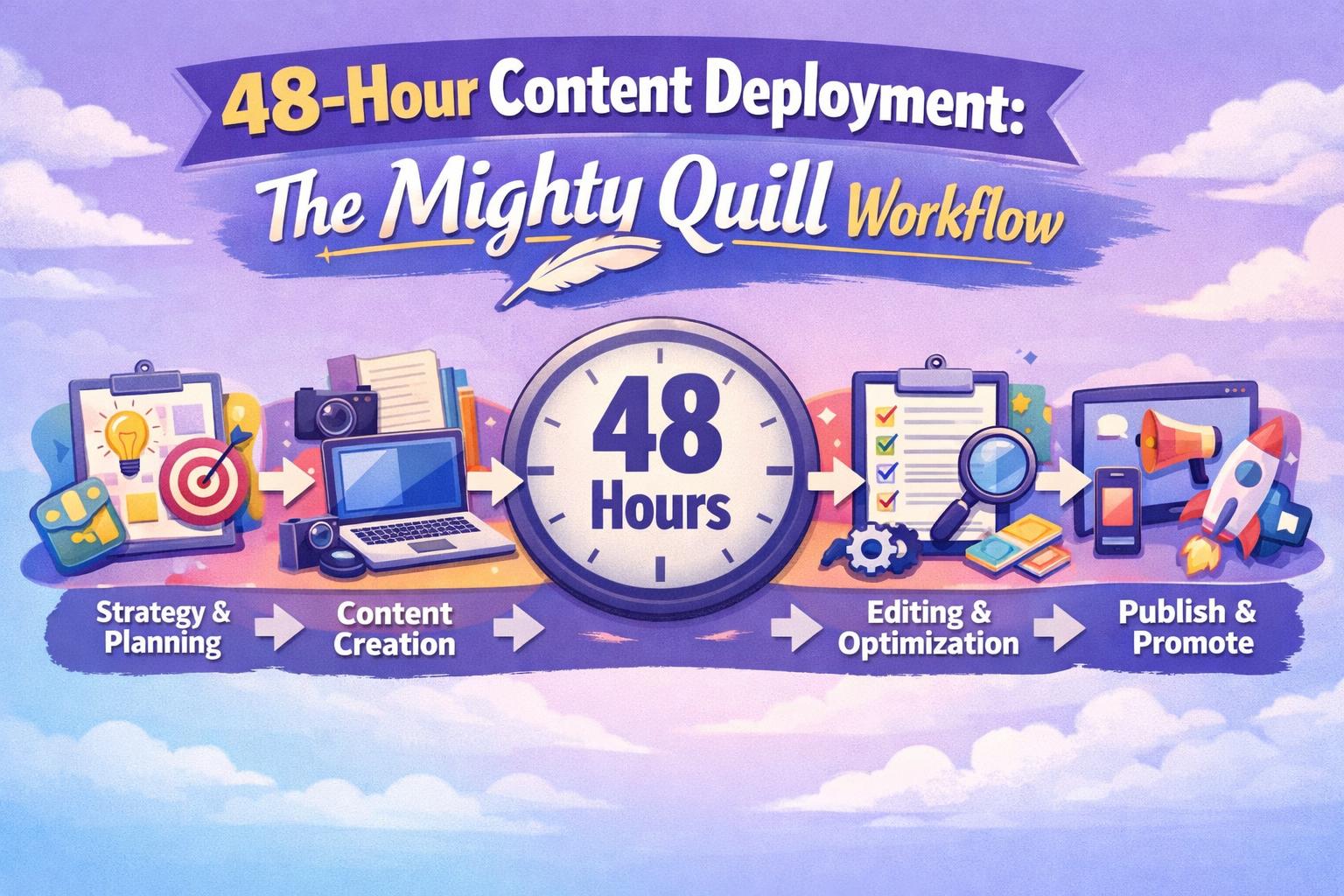

Here's the specific workflow we use at The Mighty Quill:

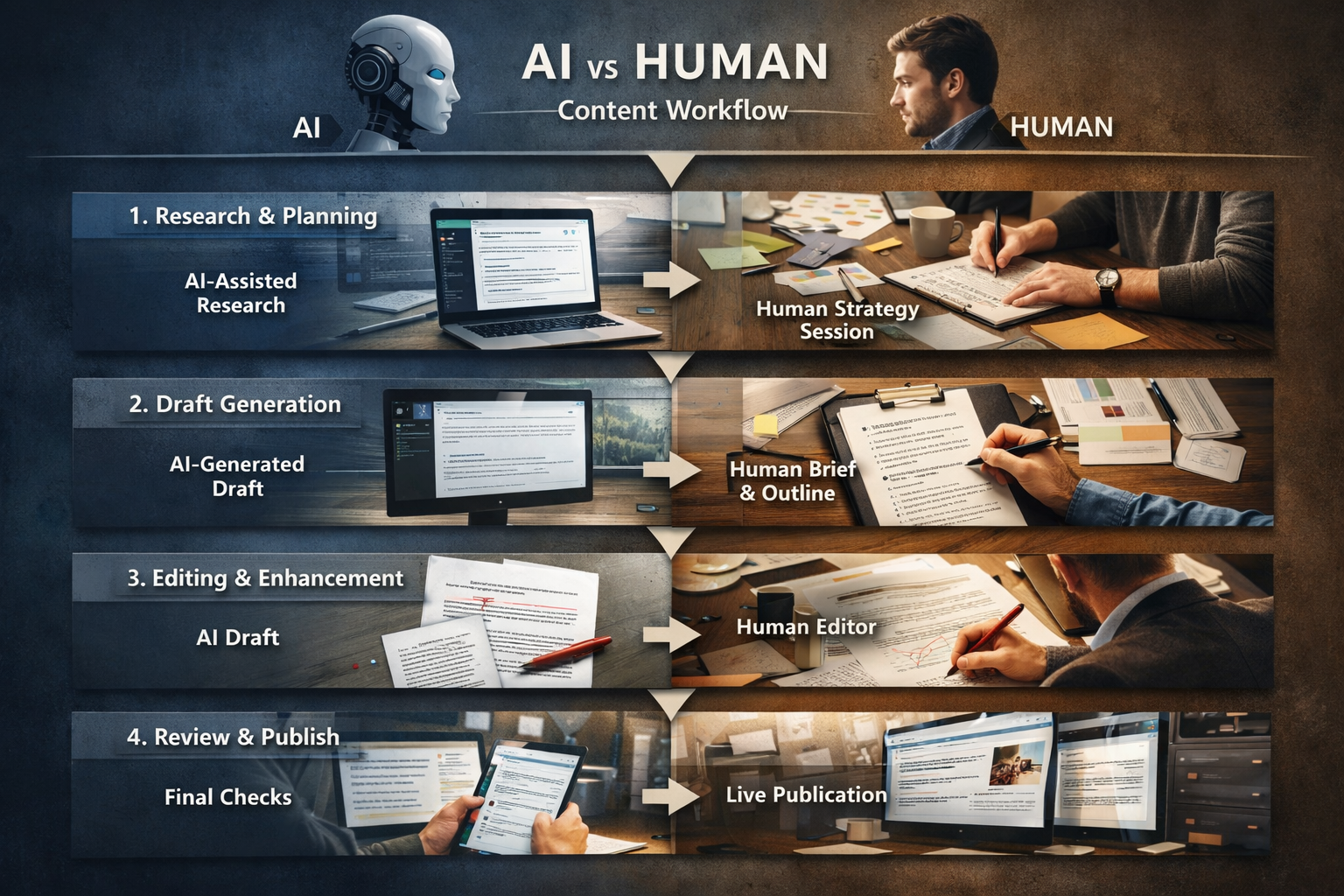

Stage 1: Research and Planning (AI-Assisted, Human-Directed)

What AI does:

Use ChatGPT or Perplexity to compile background research on the topic

Generate a list of questions the target audience likely has

Identify related topics and potential subtopics

Pull together competitive angle analysis

What humans do:

Review AI research for accuracy and completeness

Prioritize angles based on strategic goals and audience understanding

Identify gaps where original insight will be required

Make final decisions about structure and emphasis

Time investment: 20 minutes AI-assisted vs. 90+ minutes fully manual.

Stage 2: First Draft Generation (AI-Driven, Human-Briefed)

What AI does:

Produce the initial draft following a detailed brief

Include structure, key points, and supporting information

Generate multiple options for introductions or difficult sections

Critical input: The brief matters enormously. Vague prompts produce vague content. Specific briefs—with target audience, desired tone, key points to cover, examples to include, and angles to avoid—produce drafts that require less revision.

What humans prepare in the brief:

Specific audience (not "marketers" but "marketing directors at B2B SaaS companies with 20-100 employees")

Tone and voice guidelines (with examples)

Points that must be covered

Original insights or data to incorporate

Examples of what good looks like
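One way to keep briefs from going vague is to treat them as structured data and refuse to prompt until the blanks are filled. The sketch below is a minimal illustration, not our production tooling: the `ContentBrief` class and its field names are hypothetical, chosen to mirror the list above, and the rendered text is meant to be pasted into whichever AI tool you use.

```python
from dataclasses import dataclass, field


@dataclass
class ContentBrief:
    """Hypothetical container for the brief elements listed above."""
    audience: str
    tone: str
    must_cover: list[str]
    original_insights: list[str] = field(default_factory=list)
    good_examples: list[str] = field(default_factory=list)
    avoid: list[str] = field(default_factory=list)

    def gaps(self) -> list[str]:
        """Name the brief elements that are still empty."""
        return [name for name, value in vars(self).items() if not value]

    def to_prompt(self) -> str:
        """Render the brief as plain text for any AI writing tool."""
        def bullets(items: list[str]) -> str:
            return "\n".join(f"- {item}" for item in items) or "- (none provided)"

        return (
            f"Audience: {self.audience}\n"
            f"Tone: {self.tone}\n"
            f"Must cover:\n{bullets(self.must_cover)}\n"
            f"Original insights to include:\n{bullets(self.original_insights)}\n"
            f"Examples of what good looks like:\n{bullets(self.good_examples)}\n"
            f"Angles to avoid:\n{bullets(self.avoid)}"
        )


brief = ContentBrief(
    audience="marketing directors at B2B SaaS companies with 20-100 employees",
    tone="direct, conversational, opinionated",
    must_cover=["the four-stage hybrid workflow"],
)
print(brief.gaps())       # elements the human still needs to supply
print(brief.to_prompt())
```

If `gaps()` returns anything, the brief probably isn't ready, and the draft will likely need more revision than it should.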

Stage 3: Human Editing and Enhancement (Human-Led)

This is where the transformation happens. The AI draft is raw material, not finished content.

The human editor:

Injects original insight and first-hand experience

Adjusts tone for brand voice consistency

Verifies every factual claim and removes hallucinations

Adds specific examples, case studies, and nuance

Restructures sections that don't flow

Cuts generic filler and tightens the argument

Editor's checklist:

[ ] Does every claim have a source or personal experience backing it?

[ ] Are there at least 2-3 specific examples (not generic hypotheticals)?

[ ] Does the introduction hook with something concrete?

[ ] Is there original insight that couldn't be found elsewhere?

[ ] Does the voice sound like our brand, not a generic assistant?

[ ] Would I be proud to put my name on this?

Stage 4: Quality Assurance (Human-Verified)

Final review ensures accuracy, checks SEO optimization (internal links, meta descriptions, schema), and confirms the content meets editorial standards before publishing.

Verification steps:

Fact-check all statistics and quotes against original sources

Confirm all links work and point to appropriate pages

Review for any remaining AI-isms (see readability tuning below)

Test readability on mobile

Final approval from subject matter expert when possible

Readability Tuning: From Generic AI to Human-Quality Flow

Raw AI output often reads like... well, AI output. It's technically correct but somehow flat. The sentences flow predictably. The vocabulary feels safe. Personality is absent.

This is where readability tuning becomes essential.

Before and After: AI Voice vs. Human Voice

AI Draft (Before):

"When creating content for SEO purposes, it is important to consider the balance between quantity and quality. Many businesses struggle with this balance, as they want to publish frequently but also maintain high standards. Finding the right approach requires careful planning and consistent execution."

Human-Edited (After):

"Here's the trap: you know you need to publish consistently, but every time you rush a piece out, you wonder if you're damaging your credibility. The quantity vs. quality debate isn't actually a debate. It's a systems problem. Solve the system, and both improve."

What changed:

Removed filler phrasing ("it is important to consider")

Added direct address and specificity

Introduced tension (the trap)

Offered a perspective, not just information

Varied sentence length dramatically
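To put a number on the sentence-length change, here is a rough Python sketch that counts words per sentence in the two passages above. The function names and the naive sentence splitter are illustrative assumptions, not a standard readability metric; the splitter only breaks after end punctuation followed by a capital letter, which is enough for short samples like these.

```python
import re
from statistics import mean, pstdev


def sentence_lengths(text: str) -> list[int]:
    """Return the word count of each sentence in text (naive splitter)."""
    # Split after ., ! or ? only when the next word starts with a capital,
    # so abbreviations like "vs." followed by lowercase are left alone.
    sentences = re.split(r"(?<=[.!?])\s+(?=[A-Z])", text.strip())
    return [len(s.split()) for s in sentences if s]


def rhythm_report(text: str) -> dict[str, float]:
    """Summarize sentence rhythm: count, average length, and spread."""
    lengths = sentence_lengths(text)
    return {
        "sentences": len(lengths),
        "mean_words": round(mean(lengths), 1),
        "spread": round(pstdev(lengths), 1),
    }


ai_draft = (
    "When creating content for SEO purposes, it is important to consider "
    "the balance between quantity and quality. Many businesses struggle "
    "with this balance, as they want to publish frequently but also "
    "maintain high standards. Finding the right approach requires careful "
    "planning and consistent execution."
)
human_edit = (
    "Here's the trap: you know you need to publish consistently, but every "
    "time you rush a piece out, you wonder if you're damaging your "
    "credibility. The quantity vs. quality debate isn't actually a debate. "
    "It's a systems problem. Solve the system, and both improve."
)

print(sentence_lengths(ai_draft))    # [17, 17, 10]
print(sentence_lengths(human_edit))  # [25, 9, 4, 6]
print(rhythm_report(ai_draft))
print(rhythm_report(human_edit))
```

The spread is the useful signal here: a low value suggests the even, predictable rhythm typical of raw AI drafts, while a higher one suggests the mix of long and short sentences an editor adds.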

Specific Readability Adjustments

Vary sentence length aggressively. AI tends toward 15-20 word sentences, consistently. Mix in fragments. Then longer explanatory sentences when needed. Short sentences punch. Long ones let you develop complexity with appropriate nuance and room to breathe.

Replace transitions with conversational bridges. AI loves "Furthermore," "Additionally," "Moreover." Humans say "Here's the thing." Or "That said." Or simply start the next thought without a transition at all.

Add specificity wherever possible. AI says "many companies." You say "a B2B SaaS client we worked with last quarter." AI says "significant improvement." You say "43% increase in organic sessions over 90 days."

Embrace intentional fragments. For emphasis. For rhythm. For the way people actually think.

Reduce hedging language. Find and kill: "might," "could potentially," "it's possible that," "tends to," "in some cases." Replace with direct statements or, when uncertainty is genuine, specific conditions ("This works well for informational content; transactional pages need a different approach").

Inject mild opinion. AI stays neutral. Humans have perspectives. "This approach works" versus "This approach is underrated: most teams skip it because it requires patience, but the compounding payoff is significant."
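Two of the adjustments above, stock transitions and hedging language, are mechanical enough to check with a script before a human pass. The following is a small sketch under our own assumptions: the phrase lists come straight from this section, while the `flag_phrases` helper is a hypothetical name. It only counts matches; deciding whether a hedge is genuine still falls to the editor.

```python
import re

# Phrase lists taken from the adjustments above; extend them for your style guide.
STOCK_TRANSITIONS = ["furthermore", "additionally", "moreover"]
HEDGES = ["might", "could potentially", "it's possible that", "tends to", "in some cases"]


def flag_phrases(text: str, phrases: list[str]) -> dict[str, int]:
    """Count case-insensitive whole-phrase matches for each phrase that appears."""
    counts = {}
    for phrase in phrases:
        pattern = r"\b" + re.escape(phrase) + r"\b"
        hits = len(re.findall(pattern, text, flags=re.IGNORECASE))
        if hits:
            counts[phrase] = hits
    return counts


draft = (
    "Furthermore, this might work. Moreover, results could potentially "
    "improve in some cases."
)
print(flag_phrases(draft, STOCK_TRANSITIONS))
print(flag_phrases(draft, HEDGES))
```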

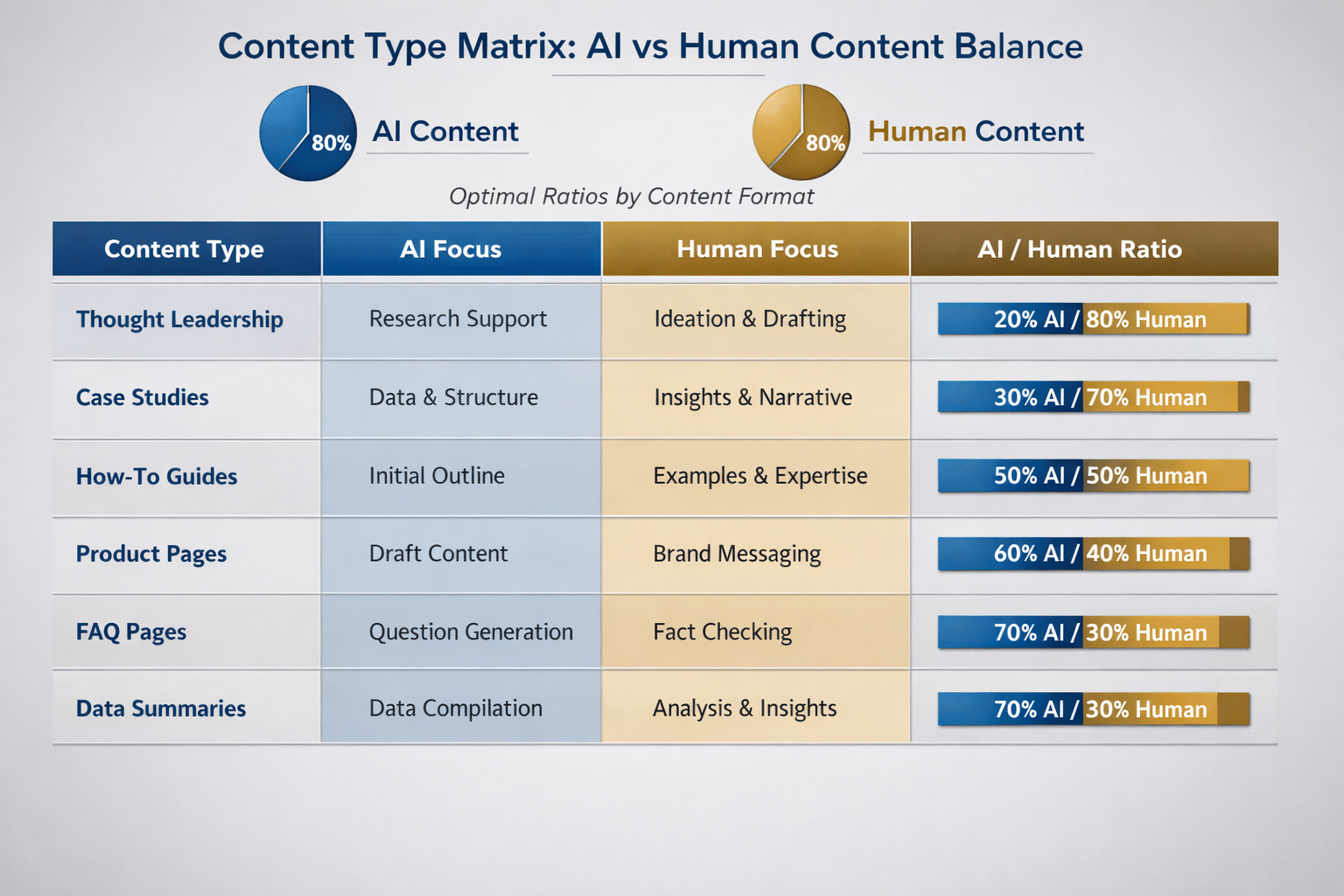

Content Type Matrix: Matching Approach to Purpose

Different content types warrant different human-to-AI ratios. Treating all content the same wastes either human time or quality potential.

| Content Type | AI Role | Human Role | Recommended Ratio |
| --- | --- | --- | --- |
| Thought leadership | Research support only | Ideation, drafting, voice | 20% AI / 80% Human |
| Case studies | Structure, data formatting | Narrative, insights, lessons | 30% AI / 70% Human |
| How-to guides | First draft, steps outline | Examples, caveats, expertise | 50% AI / 50% Human |
| Product pages | Initial draft | Accuracy, brand voice, benefits | 60% AI / 40% Human |
| FAQ pages | First draft from common questions | Verification, voice, completeness | 70% AI / 30% Human |
| Data summaries | Heavy lifting on compilation | Insight, interpretation, quality check | 70% AI / 30% Human |

The principle: Content requiring original insight, personal experience, or strong brand differentiation needs heavier human involvement. Content that's primarily structural, informational, or templated can leverage more AI assistance.
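If you plan content in a spreadsheet or script, the matrix translates into a simple lookup. The sketch below is illustrative only: it assumes the ratios describe each side's share of total effort on a piece, and the names `Mix`, `CONTENT_MATRIX`, and `human_hours` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Mix:
    ai_pct: int
    human_pct: int


# Ratios copied from the content type matrix; treat them as starting points.
CONTENT_MATRIX = {
    "thought leadership": Mix(20, 80),
    "case studies": Mix(30, 70),
    "how-to guides": Mix(50, 50),
    "product pages": Mix(60, 40),
    "faq pages": Mix(70, 30),
    "data summaries": Mix(70, 30),
}


def human_hours(content_type: str, total_hours: float) -> float:
    """Estimate the human share of a piece's total effort budget."""
    mix = CONTENT_MATRIX[content_type.lower()]
    return round(total_hours * mix.human_pct / 100, 2)


print(human_hours("Thought leadership", 10))  # 8.0
print(human_hours("FAQ pages", 4))            # 1.2
```

Run it across a month's calendar and you get a rough view of how much editor time the plan actually demands.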

Common Mistakes When Balancing AI and Human Content

Mistake 1: Publishing Unedited AI Output

This is the most common and most damaging error.

Unedited AI content doesn't just rank poorly—it damages brand perception. Readers recognize generic content, even if they can't articulate why. They leave faster. They don't share. They don't return.

How to fix it: Establish a minimum editing standard. No content publishes without at least one human pass that addresses: fact verification, voice adjustment, and addition of original insight. Build this into your workflow as non-negotiable.
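A minimum editing standard is easier to enforce when it lives in the publishing checklist or script rather than in people's heads. Here is a bare-bones sketch of such a gate; the pass names and the `can_publish` helper are hypothetical labels for the three requirements named above, not a feature of any particular CMS.

```python
# The three passes every piece needs before it goes live.
REQUIRED_PASSES = ("fact_verification", "voice_adjustment", "original_insight")


def missing_passes(completed: set[str]) -> list[str]:
    """List the required passes that have not been signed off yet."""
    return [p for p in REQUIRED_PASSES if p not in completed]


def can_publish(completed: set[str]) -> bool:
    """A piece is publishable only when no required pass is missing."""
    return not missing_passes(completed)


signed_off = {"fact_verification"}
print(can_publish(signed_off))     # False
print(missing_passes(signed_off))  # ['voice_adjustment', 'original_insight']
```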

Mistake 2: Over-Relying on Human Writers for Scalable Tasks

The opposite error: treating every piece like it requires hand-crafted artistry.

Some content types—product descriptions, location pages, data summaries, FAQ expansions—don't require deep human creativity. Using expensive human time for mechanical work limits your publishing capacity and burns out your team.

How to fix it: Audit your content calendar. Identify which pieces are "depth content" (requiring heavy human involvement) versus "coverage content" (primarily structural). Shift AI assistance toward coverage content to free human capacity for depth content.

Mistake 3: Ignoring Voice and Tone Guidance

AI doesn't understand your brand's personality intuitively. Without explicit guidance, it defaults to generic helpful-assistant tone—which makes your content indistinguishable from everyone else's.

How to fix it: Create a voice document with specific examples. Include: words you use, words you avoid, example sentences that capture your tone, and examples of what you don't sound like. Reference this document in every AI prompt.

Mistake 4: Skipping Fact Verification

AI presents false information with the same confidence as true information. There's no built-in signal that distinguishes "I'm certain about this" from "I'm making this up."

How to fix it: Treat every statistic, quote, date, and specific claim as unverified until confirmed. Build fact-checking into your editing process as a distinct step, not something you do "while editing." Use a simple system: highlight claims as you edit, then verify each one before moving forward.
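The "highlight, then verify" step can be partly automated: a script can surface the obvious candidates so nothing slips past a tired editor. The sketch below is a rough first pass under stated assumptions. The patterns are deliberately crude (percentages, four-digit years, straight-quoted text), `extract_claims` is a hypothetical helper, and it will miss plenty, so it supplements a human read rather than replacing one.

```python
import re

# Crude patterns for claims that usually need a source.
# Assumption: straight double quotes; curly quotes would need their own pattern.
CLAIM_PATTERNS = {
    "statistic": re.compile(r"\d+(?:\.\d+)?%"),
    "year": re.compile(r"\b(?:19|20)\d{2}\b"),
    "quote": re.compile(r'"[^"]+"'),
}


def extract_claims(text: str) -> list[tuple[str, str]]:
    """Return (kind, snippet) pairs in the order they appear in the text."""
    found = []
    for kind, pattern in CLAIM_PATTERNS.items():
        for match in pattern.finditer(text):
            found.append((match.start(), kind, match.group()))
    found.sort()
    return [(kind, snippet) for _, kind, snippet in found]


draft = 'Organic sessions rose 43% in 2023. The editor said "ship it" twice.'
for kind, snippet in extract_claims(draft):
    print(f"[ ] verify {kind}: {snippet}")
```

Each printed line becomes a checkbox the editor clears against an original source before the piece moves on.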

Mistake 5: Expecting AI to Replace Strategy

AI executes. It doesn't strategize.

It can write content about topics you specify, but it can't determine which topics will move your business forward. It can produce volume, but it can't tell you whether that volume serves your actual goals.

How to fix it: Keep humans responsible for content strategy: topic selection, prioritization, audience understanding, and competitive positioning. Use AI only for execution within that human-defined strategy.

How Search Engines Evaluate Content Quality

Google's approach has evolved significantly. Its guidance on helpful, people-first content asks whether a page demonstrates:

Experience — Does the creator have first-hand knowledge? This shows up in specific examples, personal observations, and the ability to address edge cases that only someone with real experience would know [1].

Expertise — Does the content show deep understanding? Not just surface-level coverage, but the ability to explain why things work, when they don't, and what alternatives exist.

Authoritativeness — Is the source recognized in its field? This connects to author credentials, publication history, and external signals like links and citations.

Trustworthiness — Is the information accurate and reliable? Factual accuracy, source citation, and transparent acknowledgment of limitations all contribute.

AI-generated content can technically satisfy surface-level quality signals. But demonstrating genuine experience—sharing specific lessons, acknowledging limitations, offering original perspective—requires human involvement.

The sites winning in competitive SERPs combine publishing consistency (where AI helps) with authentic expertise signals (where humans remain essential).

Building a Sustainable Content System

Finding the right balance isn't a one-time decision. It's an operational system that evolves with results.

Clear workflows — Defined roles for AI generation, human editing, and quality review. Everyone knows who's responsible for what at each stage.

Style guidelines — Documentation ensuring consistent voice across all content, regardless of who edits it or which AI tool generates the draft.

Fact-checking protocols — Verification steps built into the process, not bolted on afterward. Specific responsibility assigned for confirming claims.

Feedback loops — Performance data informing future content decisions. Which pieces performed? What do they have in common? How does that change what you create next?

Continuous calibration — Adjusting the AI-to-human ratio based on results. Some content types might shift toward more AI assistance over time; others might require more human involvement than you initially planned.

The goal is an engine that produces high-quality content consistently—without burning out your team or sacrificing the authenticity that readers and search engines reward.

Start Building Your Hybrid Content Engine

The AI vs human content debate ends when you stop choosing sides and start building systems.

AI makes consistent publishing possible. Human expertise makes that content worth reading. Together, they create something neither achieves alone: an organic traffic engine that compounds over time.

The brands gaining ground right now aren't the ones with the best AI tools or the most talented writers. They're the ones who've figured out how to combine both into a reliable system.

Ready to see what a hybrid content system looks like in practice? Try The Mighty Quill free: get 2 custom articles in 48 hours and experience how AI-assisted, human-quality content actually works.

Frequently Asked Questions

Is AI-generated content bad for SEO?

Not inherently. Google evaluates content based on helpfulness and quality, not how it was created [1]. AI content that's accurate, well-edited, and genuinely useful can rank well. The risk comes from publishing unedited AI output that lacks depth, contains errors, or reads generically—which hurts engagement metrics and trust signals. The tool matters less than the final quality.

How much human editing does AI content need?

It depends on the content type and quality standards. Thought leadership requires substantial human input—often 70% or more of the total effort. Straightforward informational content may need lighter editing focused on fact-checking, voice adjustment, and adding specific examples. The universal rule: never publish completely unedited AI drafts. Budget for meaningful human review on every piece.

Can Google detect AI-written content?

Google has stated it doesn't penalize content simply for being AI-generated [4]. However, AI content often exhibits patterns—generic phrasing, lack of original insight, predictable structure—that correlate with lower quality. The better question: Does your content demonstrate genuine expertise and provide real value? That matters more than whether Google can identify the authorship method.

What's the ideal ratio of AI to human involvement?

There's no universal formula. High-stakes content (case studies, thought leadership, cornerstone pages) benefits from 70-80% human effort. Scalable content (FAQ pages, product descriptions, data summaries) can leverage more AI assistance, perhaps 60-70% AI, with focused human editing. How-to guides sit in the middle at roughly 50/50. Start with the content type matrix above, then let performance data guide adjustments for your specific situation.

How do I maintain brand voice when using AI?

Three requirements: detailed style guidelines, explicit prompting, and consistent human editing. Document your voice preferences with specific examples—not just "professional and friendly" but actual sentences that capture your tone. Include voice guidance in every AI prompt. And ensure your editors understand the brand voice deeply enough to adjust AI output toward it. Without all three, content will drift toward generic.

Our Approach to This Content

This article reflects The Mighty Quill's hands-on experience building hybrid content systems for growth-focused businesses. Our founder has spent over 15 years in digital marketing, testing what actually works for SEO and content operations. We combine AI efficiency with human editorial judgment—ensuring every piece demonstrates the expertise and authenticity that readers and search engines reward. The workflow described here is the same one we use for our clients.

Cited Works

[1] Google Search Central — "Creating helpful, reliable, people-first content." https://developers.google.com/search/docs/fundamentals/creating-helpful-content

[2] MIT Technology Review — "Why large language models hallucinate." https://www.technologyreview.com/2023/10/27/1082501/why-large-language-models-hallucinate/

[3] Search Engine Journal — "How User Engagement Metrics Impact SEO Rankings." https://www.searchenginejournal.com/user-engagement-seo/

[4] Google Search Central Blog — "Google Search's guidance about AI-generated content." https://developers.google.com/search/blog/2023/02/google-search-and-ai-content